Interplay of Realities: A Hybrid Planning Tool

By Nazli Tatar and Nuri Miller

CO Architects continues to investigate technologies that support collaborative decision-making for the design of high-value spaces. One of our key goals is to remove friction from user adoption through more intuitive and playful interfaces. Our highly manipulatable virtual reality operating rooms now push boundaries by mixing the physical and virtual. In this new iteration, mixed reality operating rooms let users interact with physical scale models that are tracked by cameras using Computer Vision.

Why Build It?

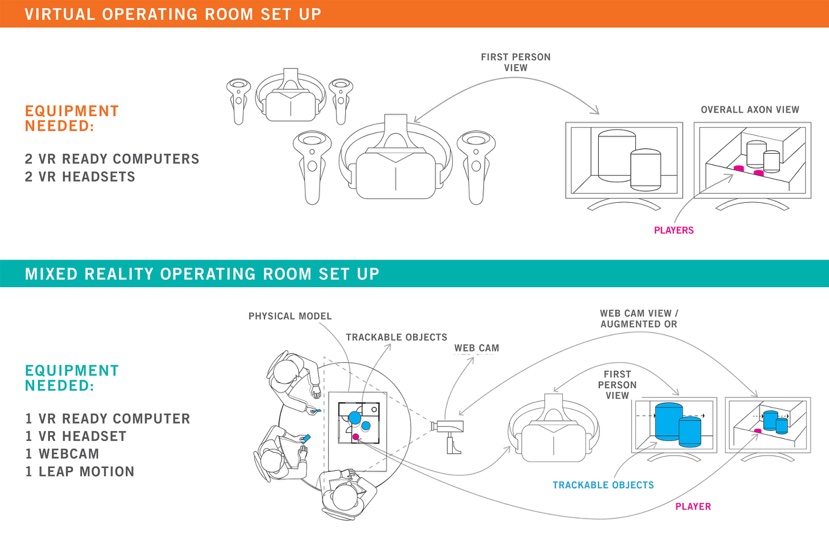

In initial user-testing, we observed that most users found the VR controllers to be challenging, unless they had some form of gaming experience. We addressed this by creating a training environment in which users could learn the functions for the various buttons and toggles on the HTC Vive controllers. Still, we recognized that the controllers were a potential barrier between the users and the experience. With this second version, we hope to reach a wider audience by replacing the controllers with physical representations of the virtual objects. This “digital twin” allows a group of users to re-configure a space and understand it immediately at both a model scale and real-world scale within VR.

How It Works

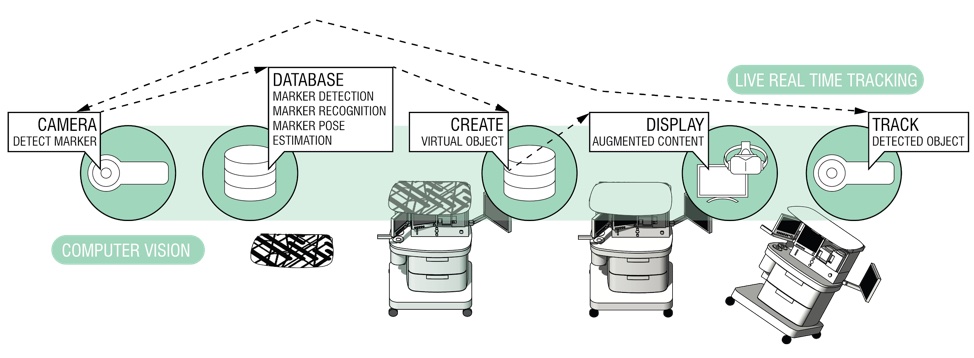

The scaled physical model is tracked by a webcam, which feeds each object’s location data to the computer in real time. Each object carries a unique marker so that the computer can tell it apart from the others. When an object with a marker is moved to a new location in the model, the virtual representation of that object moves to the same location at the same time. Because of this seamless real-time connection, users without VR headsets can still be part of the experience by manipulating the physical model. In addition to furniture and equipment, characters are also represented in the model, so one can navigate the room by simply moving the physical character.

How We Built It & Key Features

The Unity game engine has been our primary environment for developing these custom experiences. It continues to provide an open and flexible platform for interactive design with a wealth of connections to third-party tools. In developing this new interactive experience, we were able to use a number of Computer Vision technologies within Unity to track physical objects in real time.

First, we store unique visual markers for each physical model. Then, we create links between the physical markers and the virtual assets. This allows us to identify specific patterns from the camera’s live stream and determine an object’s position and orientation relative to Unity’s coordinate system.
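The linking step can be illustrated with a minimal sketch. It is written in Python for brevity rather than as Unity code, and it is not our production implementation. The marker IDs, asset names, the 1:24 model scale, and the axis mapping are all hypothetical. It assumes the tracker already reports each marker's position on the model base in millimetres and its rotation in degrees, and converts that into a full-scale pose on a Y-up floor plane like Unity's.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

MODEL_SCALE = 24.0   # hypothetical: a 1:24 physical model
MM_PER_METRE = 1000.0

# Hypothetical links between marker IDs and virtual asset names.
MARKER_TO_ASSET = {
    7: "surgical_table",
    12: "anesthesia_cart",
    21: "nurse_a",
}


@dataclass
class Pose:
    x: float        # metres, along the floor
    y: float        # metres, height above the floor (Y is up)
    z: float        # metres, along the floor
    yaw_deg: float  # rotation about the vertical axis, 0 to 360


def model_to_world(marker_id: int, x_mm: float, y_mm: float,
                   angle_deg: float) -> Optional[Tuple[str, Pose]]:
    """Map a marker reading on the model base to a full-scale pose.

    Returns None for markers that are not linked to any asset.
    The axis mapping (model y -> world z) and the rotation sign are
    assumptions; a real setup would calibrate them against the camera.
    """
    asset = MARKER_TO_ASSET.get(marker_id)
    if asset is None:
        return None
    x = x_mm / MM_PER_METRE * MODEL_SCALE
    z = y_mm / MM_PER_METRE * MODEL_SCALE
    return asset, Pose(x=x, y=0.0, z=z, yaw_deg=angle_deg % 360.0)
```

In this sketch, a marker read 100 mm from the model origin lands about 2.4 m from the room origin, which is why the print scale and the virtual scale have to agree.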

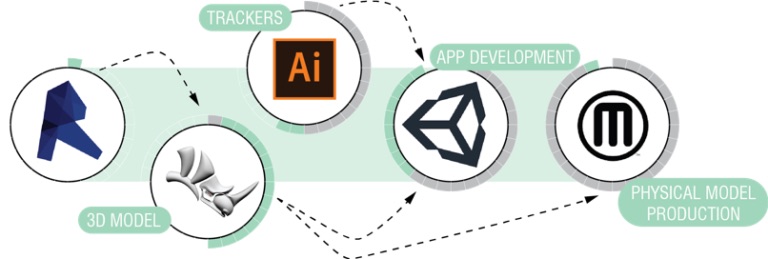

Our setup process begins by exporting a building model from Revit. Any model cleanup and 3D print preparation is then done in Rhino. We carefully match up the scale of the 3D printed objects with their unique markers in the virtual environment. Linking between the physical models and virtual assets is completed in Unity.

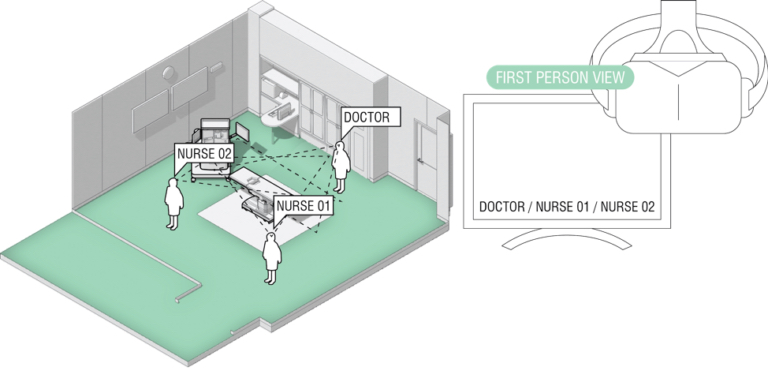

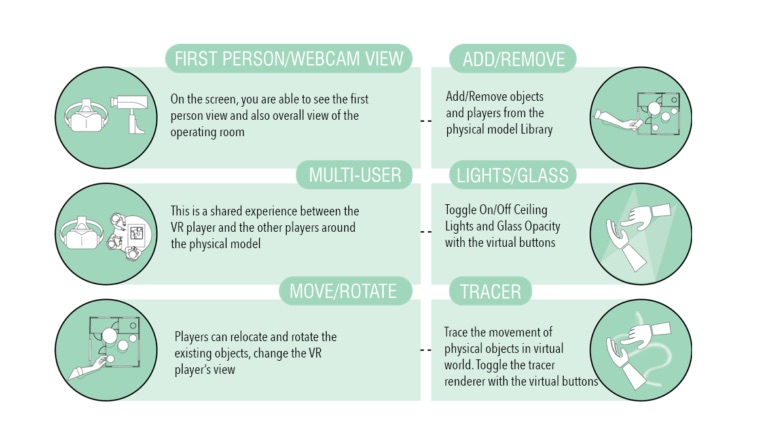

We place several different characters within the virtual scene, including two nurses and a doctor. The VR user can switch between characters to view the scene from different points of view. To move the viewpoint, the user simply moves and rotates the character’s representation in the physical model.

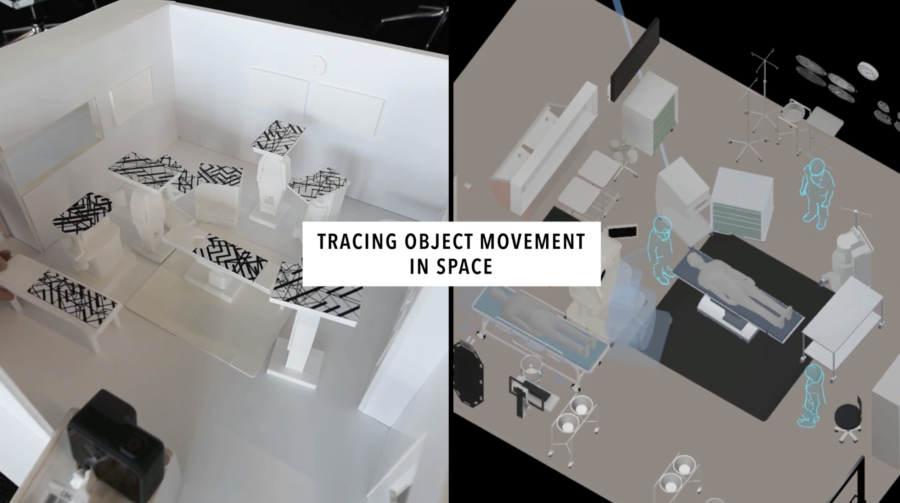

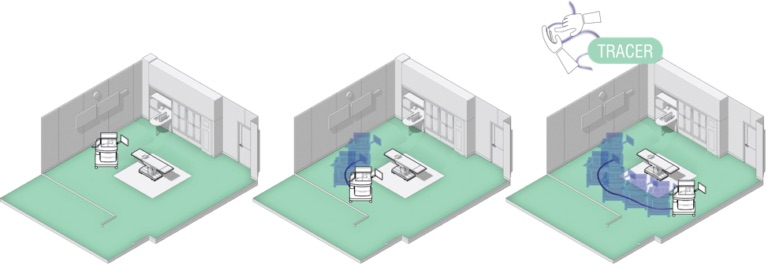

Considering the ever-changing and tight nature of the operating room space, it is important that we visualize how equipment may move through it. We incorporated a “tracer” feature that records the movement of objects and allows the users to see it as a ghosted 3D zone within the virtual space.
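One possible way to accumulate such a zone is sketched below in Python. This is an illustration under stated assumptions, not a description of how our tracer is built: the `Tracer` class, the grid cell size, and the axis-aligned footprint are all hypothetical, and the object's rotation is ignored for simplicity. Each recorded position marks the floor cells covered by the object's footprint, and the union of marked cells stands in for the ghosted zone.

```python
import math


class Tracer:
    """Accumulates the floor area swept by a moving object (hypothetical sketch)."""

    def __init__(self, cell_size: float = 0.25):
        self.cell_size = cell_size  # metres per grid cell (assumed value)
        self.cells = set()          # (i, j) indices of every cell touched so far

    def record(self, x: float, z: float, width: float, depth: float) -> None:
        """Mark the cells covered by an axis-aligned footprint centred at (x, z)."""
        half_w, half_d = width / 2.0, depth / 2.0
        i_min = math.floor((x - half_w) / self.cell_size)
        i_max = math.floor((x + half_w) / self.cell_size)
        j_min = math.floor((z - half_d) / self.cell_size)
        j_max = math.floor((z + half_d) / self.cell_size)
        for i in range(i_min, i_max + 1):
            for j in range(j_min, j_max + 1):
                self.cells.add((i, j))

    def area(self) -> float:
        """Approximate swept floor area in square metres."""
        return len(self.cells) * self.cell_size ** 2
```

Calling `record` once per tracked frame while a cart is moved would grow `cells` along its path; a renderer could then extrude the marked cells to the object's height to draw the ghosted volume.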

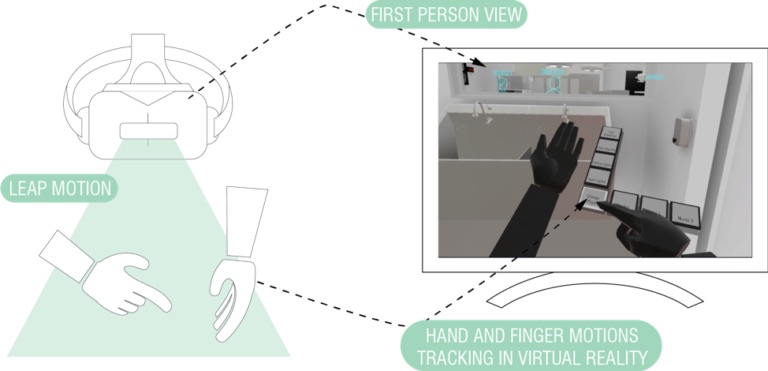

Much of the interaction previously provided by the VR controllers is now controlled through the physical model. When a button is required, such as to toggle the lights or turn the “tracer” feature on and off, a virtual pop-up display appears attached to the user’s hand. We used the Leap Motion hand-tracking device to show the user’s hands in virtual space and overlay a set of buttons that the user can press without the need for a controller.

Primary Features of the Mixed Reality Operating Room

One of our hopes for this project – and other interactive tools under development at CO – is that more self-led exploration by project stakeholders will foster greater engagement and investment in the design. In order for this to happen, design interaction has to become more intuitive through advanced methods like using cameras and sensors to mix the physical and virtual. Ultimately, decisions can be made faster and with increased confidence that everyone shares the same understanding and design vision.